Introduction

Giant Language Fashions are recognized for his or her text-generation capabilities. They’re educated with hundreds of thousands of tokens in the course of the pre-training interval. It will assist the big language fashions perceive English textual content and generate significant full tokens in the course of the era interval. One of many different widespread duties in Pure Language Processing is the Sequence Classification Activity. On this, we classify the given sequence into completely different classes. This may be naively performed with Giant Language Fashions by Immediate Engineering. However this may solely typically work. As an alternative, we will tweak the Giant Language Mannequin to output a set of possibilities for every class for a given enter. This information will present how you can prepare such LLM and work with the finetune Llama 3 mannequin.

Studying Aims

- Perceive the fundamentals of Giant Language Fashions and their purposes

- Be taught to finetune Llama 3 mannequin for sequence classification duties

- Discover important libraries for working with LLMs in HuggingFace

- Acquire expertise in loading and preprocessing datasets for LLM coaching

- Customise coaching processes utilizing class weights and a customized coach class

This text was printed as part of the Knowledge Science Blogathon.

Finetune Llama 3- Importing Libraries

For this information, we will probably be working in Kaggle. Step one could be to obtain the mandatory libraries that we are going to require to finetune Llama 3 for Sequence Classification. Allow us to run the code beneath:

!pip set up -q transformers speed up trl bitsandbytes datasets consider huggingface-cli

!pip set up -q peft scikit-learnObtain Libraries

We begin by downloading the next libraries:

- Transformers: It is a library from HuggingFace, which we will use to obtain, create purposes with, and fine-tune Deep Studying fashions, together with giant language fashions.

- Speed up: That is once more a library from HuggingFace that hastens the inference velocity of the big language fashions being run on the GPU.

- Trl: It’s a Python Bundle from HuggingFace, which we will use to fine-tune the Deep Studying Fashions out there on the HuggingFace hub. With this TRL library, we will even fine-tune the big language fashions.

- bitsandbytes: LLMs are very excessive in reminiscence, so we will straight work with them in Low RAM GPUs. We quantize the Giant Language Fashions to a decrease precision so we will match them within the GPU, and for this, we require the bitsandbytes library.

- Datasets: It is a Python library from HuggingFace, with which we will obtain varied open-source datasets belonging to completely different Deep Studying and Machine Studying Classes, together with Picture Classification, Textual content Era, Textual content Summarization, and extra.

- Consider: We are going to use this library to guage our mannequin earlier than and after coaching.

- Huggingface-cli: That is required as a result of we should log in to HuggingFace to work with the Llama3 mannequin.

- peft: With the GPU we’re working with, it’s inconceivable to coach all of the parameters of the big language fashions. As an alternative, we will solely prepare a subset of those parameters, which might be performed utilizing the peft library.

- Scikit-learn: This library comprises many various machine studying and deep studying instruments. We are going to use this library for the error metrics to check the Giant Language Mannequin earlier than and after coaching.

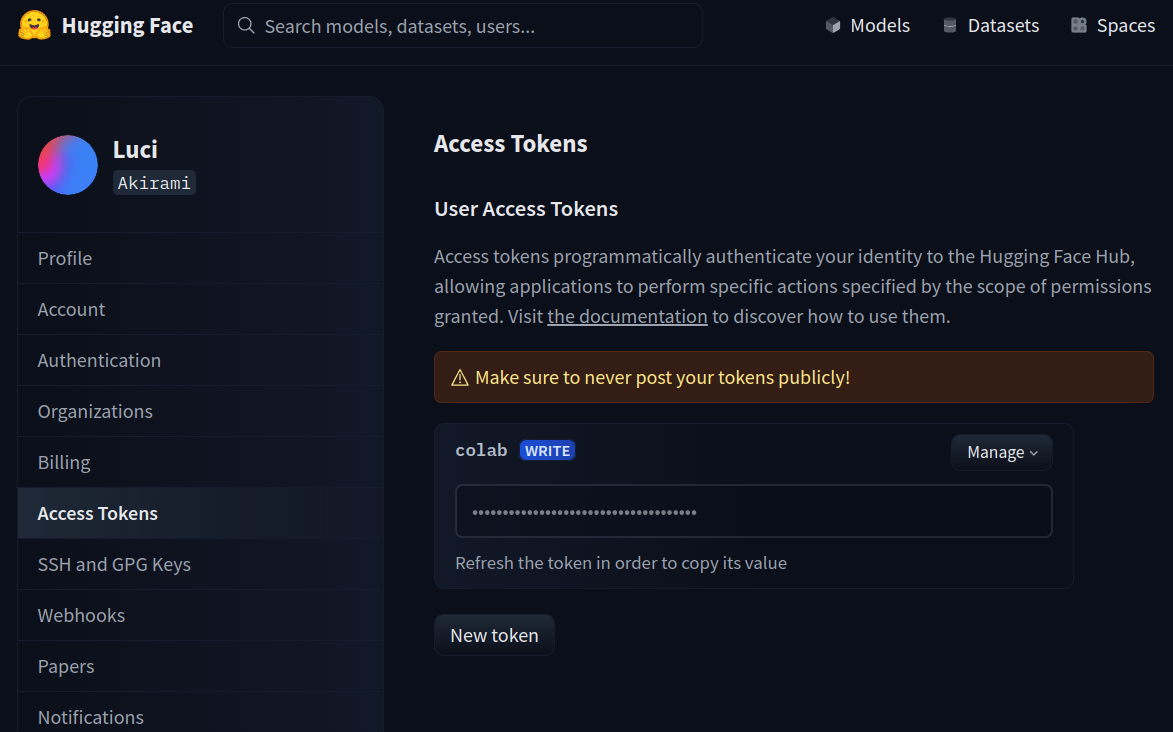

After that, attempt logging in to the HuggingFace hub. For this, we are going to work with the huggingface-cli software. The code for this may be seen beneath:

!huggingface-cli login --token $YOUR_HF_TOKENCode Clarification

Right here, we name the login possibility for the huggingface-cli with the extra – token possibility. Right here we offer our HuggingFace token to log into the HuggingFace. To get the HuggingFace token, go to this hyperlink. As proven within the pic beneath, you possibly can create an entry token by clicking on New Token or utilizing an present token. Simply copy that token and paste it within the place YOUR_HF_TOKEN.

Let’s Load the Dataset

Subsequent, we load the dataset for coaching. For this, we work with the beneath code:

from datasets import load_dataset

dataset = load_dataset("ag_news")- We begin by importing the load_dataset operate from the datasets library

- Then we name the load_dataset operate with the dataset identify “ag_news”

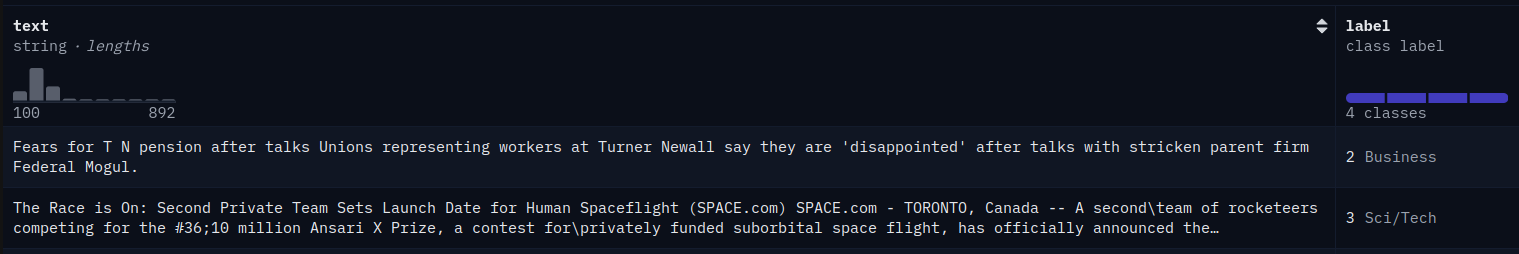

Working it will obtain the ag_news dataset to the dataset variable. The ag_news dataset appears to be like just like the beneath pic:

It’s a information classification dataset. The information is assessed into completely different classes, reminiscent of world, sports activities, enterprise, and sci/tech. Now, allow us to see if the examples for every class are represented in equal numbers or if there’s any class imbalance.

import pandas as pd

df = pd.DataFrame(dataset['train'])

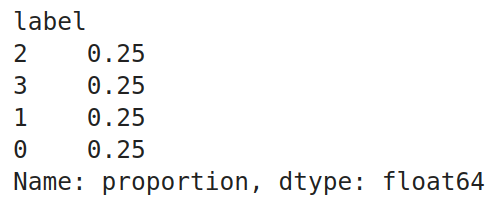

df.label.value_counts(normalize=True)

Code Clarification

- Right here, we begin by importing the pandas library

- The ag_news dataset comprises each the coaching set and a take a look at set. And the dataset variable of kind DatasetDict. For ease of use, we convert it into pandas DataFrame

- Then we name the value_counts() operate on the “label” column of the dataframe with normalize set to True

Working this code produced the next output. We are able to test that each one 4 labels have an equal proportion, which suggests that every class has an equal variety of examples within the dataset. The dataset is big, so we solely want part of it. So, we pattern some knowledge from this dataframe with the next code:

# Splitting the dataframe into 4 separate dataframes based mostly on the labels

label_1_df = df[df['label'] == 0]

label_2_df = df[df['label'] == 1]

label_3_df = df[df['label'] == 2]

label_4_df = df[df['label'] == 3]

# Shuffle every label dataframe

label_1_df = label_1_df.pattern(frac=1).reset_index(drop=True)

label_2_df = label_2_df.pattern(frac=1).reset_index(drop=True)

label_3_df = label_3_df.pattern(frac=1).reset_index(drop=True)

label_4_df = label_4_df.pattern(frac=1).reset_index(drop=True)

# Splitting every label dataframe into prepare, take a look at, and validation units

label_1_train = label_1_df.iloc[:2000]

label_1_test = label_1_df.iloc[2000:2500]

label_1_val = label_1_df.iloc[2500:3000]

label_2_train = label_2_df.iloc[:2000]

label_2_test = label_2_df.iloc[2000:2500]

label_2_val = label_2_df.iloc[2500:3000]

label_3_train = label_3_df.iloc[:2000]

label_3_test = label_3_df.iloc[2000:2500]

label_3_val = label_3_df.iloc[2500:3000]

label_4_train = label_4_df.iloc[:2000]

label_4_test = label_4_df.iloc[2000:2500]

label_4_val = label_4_df.iloc[2500:3000]

# Concatenating the splits again collectively

train_df = pd.concat([label_1_train, label_2_train, label_3_train, label_4_train])

test_df = pd.concat([label_1_test, label_2_test, label_3_test, label_4_test])

val_df = pd.concat([label_1_val, label_2_val, label_3_val, label_4_val])

# Shuffle the dataframes to make sure randomness

train_df = train_df.pattern(frac=1).reset_index(drop=True)

test_df = test_df.pattern(frac=1).reset_index(drop=True)

val_df = val_df.pattern(frac=1).reset_index(drop=True)- Our knowledge comprises 4 labels. So we create 4 dataframes the place every dataframe consists of a single label

- Then, we shuffle every of those labels by calling the pattern operate on them

- Then we cut up every of those label dataframes into 3 splits referred to as the prepare, take a look at, and legitimate dataframes. For prepare, we offer 2000 labels, and for testing and validation, we offer 500 every

- Now, we concat the coaching dataframes of all of the labels right into a single coaching dataframe by the pd.concat() operate

- We do the identical factor even for the take a look at and validation dataframes

- Lastly, we shuffle the train_df, test_df, and valid_df another time to make sure randomness in them

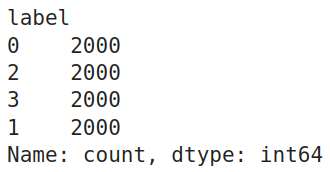

Checking the Worth Counts

For affirmation, allow us to test the worth counts of every label within the coaching dataframe. The code for this will probably be:

train_df.label.value_counts()

Pandas DataFrames to DatasetDict

So, we will test that the coaching dataframe has equal examples for every of the 4 labels. Earlier than sending them to coaching, we have to convert these Pandas DataFrames to DatasetDict, which the HuggingFace coaching library accepts. For this, we work with the next code:

from datasets import DatasetDict, Dataset

# Changing pandas DataFrames into Hugging Face Dataset objects:

dataset_train = Dataset.from_pandas(train_df)

dataset_val = Dataset.from_pandas(val_df)

dataset_test = Dataset.from_pandas(test_df)

# Mix them right into a single DatasetDict

dataset = DatasetDict({

'prepare': dataset_train,

'val': dataset_val,

'take a look at': dataset_test

})

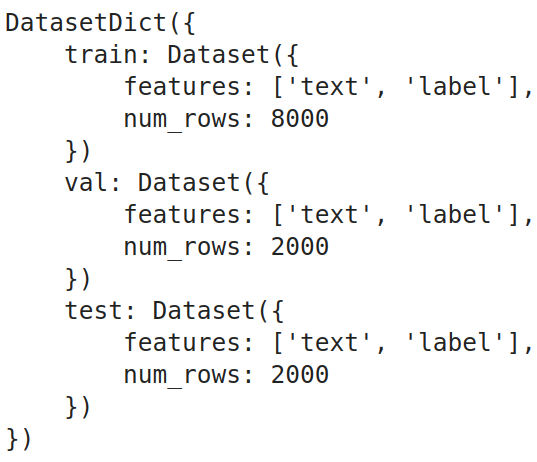

dataset

Code Clarification

- First, we import the DatasetDict class from the datasets library

- Then we convert every of the train_df, test_df, and val_df from Pandas DataFrames to the HuggingFace Dataset kind

- Lastly, we mix all of the prepare, take a look at, and val HuggingFace Dataset, create the ultimate DatasetDict kind variable, and retailer it within the dataset variable

We are able to see from the output that the DatasetDict comprises 3 Datasets, that are the prepare, take a look at, and validation datasets. The place every of those datasets comprises solely 2 columns: one is textual content, and the opposite is the label.

Right here, in our dataset, the proportion of every class is similar. In sensible instances, this may solely typically be true. So when the courses are imbalanced, we have to take correct measures so the LLM doesn’t give extra significance to the label containing extra examples. For this, we calculate the category weights.

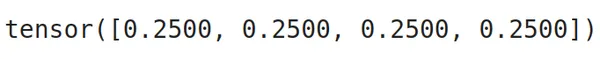

Class weights inform us how a lot significance we should give to every class; the extra class weights there are, the extra significance there’s to the category. If we’ve got an imbalanced dataset, we could present extra class weight to the label, having fewer examples, thus giving extra significance to it. To get these class weights, we will take the inverse of the proportion of sophistication labels (worth counts) of the dataset. The code for this will probably be:

import torch

class_weights=(1/train_df.label.value_counts(normalize=True).sort_index()).tolist()

class_weights=torch.tensor(class_weights)

class_weights=class_weights/class_weights.sum()

class_weights

Code Clarification

- So, we begin by taking the values of the inverse of worth counts of the category labels

- Then, we convert these from a listing kind to a torch tensor kind

- We then normalize the class_weights by dividing them by the sum of class_weights

We are able to see from the output that the category weights are equal for all of the courses; it’s because all of the courses have the identical variety of examples.

Additionally learn: 3 Methods to Use Llama 3 [Explained with Steps]

Mannequin Loading -Quantization

On this part, we are going to obtain and put together the mannequin for the coaching. The primary is to obtain the mannequin. We can not work with the complete mannequin as a result of we’re coping with a small GPU; therefore, we are going to quantify it. The code for this will probably be:

from transformers import BitsAndBytesConfig, AutoModelForSequenceClassification

quantization_config = BitsAndBytesConfig(

load_in_4bit = True,

bnb_4bit_quant_type="nf4",

bnb_4bit_use_double_quant = True,

bnb_4bit_compute_dtype = torch.bfloat16

)

model_name = "meta-llama/Meta-Llama-3-8B"

mannequin = AutoModelForSequenceClassification.from_pretrained(

model_name,

quantization_config=quantization_config,

num_labels=4,

device_map='auto'

)Code Clarification

- We import the BitsAndBytesConfig and the AutoModelForSequenceClassification courses from the transformers library.

- We should outline a quantization config to create a quantization for the mannequin. For this, we are going to create an occasion of BitsAndBytesConfig and provides it completely different quantization parameters.

- load_in_4bit: That is set to True, which tells that we want to quantize the mannequin to a 4-bit precision

- bnb_4bit_quant_type: That is the kind of quantization we want to work with, and we will probably be going with Regular Float, aka NF4, which is the advisable one

- bnb_4bit_compute_dtype: That is the info kind through which the GPU computations will probably be carried out. It will often be both torch.float32 / torch.float16 / torch.bfloat16, in our instance, based mostly on the GPU, we go along with torch.bfloat16

- bnb_4bit_use_double_quant: When set to True, it is going to additional scale back the reminiscence footprint by quantizing the quantization constants

- Subsequent, we offer the mannequin identify that we are going to finetune to the model_name variable, which right here is Meta’s newly launched Llama 3 8B

- Then, we create an occasion of the AutoModelForSequenceClassification class and provides it the mannequin identify and the quantization config

- We additionally used one other variable referred to as the num_labels and set it to 4. What AutoModelForSequenceClassification does is take away the final layer of the Llama 3 LLM and change it with a linear layer

- We’re setting the output of this linear layer to 4; it’s because there are 4 class labels in our dataset

- Lastly, we set the device_map to “auto,” so the mannequin will get loaded to the GPU

So operating the above will obtain the Llama 3 8B Giant Language Mannequin from the HuggingFace hub, quantize it based mostly on the quantization_config that we’ve got offered to it, after which change the output head of the LLM with a linear head with 4 neurons for the output and pushed the mannequin to the GPU. Subsequent, we are going to create a LoRA config for the mannequin to coach solely a subset of parameters. The code for this will probably be:

LoRA Configuration

from peft import LoraConfig, prepare_model_for_kbit_training, get_peft_model

lora_config = LoraConfig(

r = 16,

lora_alpha = 8,

target_modules = ['q_proj', 'k_proj', 'v_proj', 'o_proj'],

lora_dropout = 0.05,

bias="none",

task_type="SEQ_CLS"

)

mannequin = prepare_model_for_kbit_training(mannequin)

mannequin = get_peft_model(mannequin, lora_config)Code Clarification

- We begin by importing LoraConfig, prepare_model_for_kbit_training, and get_peft_model from peft library

- Then, we instantiate a LoraConfig class and provides it completely different parameters, which embody

- r: This defines the rank of the matrix of parameters that we are going to be coaching. We’ve set this to 16

- lora_alpha: It is a hyperparameter for the LoRA-based coaching. That is often set to half of the worth of r

- target_modules: It is a checklist the place we specify the place we must be including the LoRA-based coaching. For this, we select all the eye layers, just like the Okay, Q, V, and the Output Projections

- lora_dropout: This we set to 0.05, which can randomly drop the neurons in order that overfitting doesn’t occur

- bias: If set to true, then even the bias time period will probably be added together with the weights for coaching

- task_type: As a result of we’re coaching for a classification dataset and have added the classification layer to the LLM, we will probably be maintaining this “SEQ_CLS”

- Then, we name the prepare_model_for_kbit_training() operate, to which we give the mannequin. This operate preprocess the quantized mannequin for coaching

- Lastly, we name the get_peft_model() operate by giving it each the mannequin and the LoraConfig

Working this, the get_peft_model will take the mannequin and put together it for coaching with a PEFT methodology, just like the LoRA on this case, by wrapping the mannequin and the LoRA Configuration.

Mannequin Testing – Pre Coaching

On this part, we are going to take a look at the Llama 3 mannequin on the take a look at knowledge earlier than the mannequin has been educated. To do that, we are going to first obtain the tokenizer. The code for this will probably be:

from transformers import AutoTokenizer

model_name = "meta-llama/Meta-Llama-3-8B"

tokenizer = AutoTokenizer.from_pretrained(model_name, add_prefix_space=True)

tokenizer.pad_token_id = tokenizer.eos_token_id

tokenizer.pad_token = tokenizer.eos_tokenCode Clarification

- Right here, we import the AutoTokenizer class from the transformers library

- We instantiate a tokenizer by calling the from_pretrained() operate of the AutoTokenizer class and passing it the mannequin identify

- We then set the pad_token of the tokenizer to the eos_token, and the identical goes for the pad_token_id

mannequin.config.pad_token_id = tokenizer.pad_token_id

mannequin.config.use_cache = False

mannequin.config.pretraining_tp = 1Subsequent, we even edit the mannequin configuration by setting the pad token ID of the mannequin to the pad token ID of the tokenizer and never utilizing the cache. Now, we are going to give our take a look at knowledge to the mannequin and gather the outputs:

sentences = test_df.textual content.tolist()

batch_size = 32

all_outputs = []

for i in vary(0, len(sentences), batch_size):

batch_sentences = sentences[i:i + batch_size]

inputs = tokenizer(batch_sentences, return_tensors="pt",

padding=True, truncation=True, max_length=512)

inputs = {ok: v.to('cuda' if torch.cuda.is_available() else 'cpu') for ok, v in inputs.objects()}

with torch.no_grad():

outputs = mannequin(**inputs)

all_outputs.append(outputs['logits'])

final_outputs = torch.cat(all_outputs, dim=0)

test_df['predictions']=final_outputs.argmax(axis=1).cpu().numpy()Code Clarification

- First, we convert the weather within the textual content column of the take a look at DataFrame into a listing of sentences and retailer them within the variable sentences.

- Then, we outline a batch dimension to signify the mannequin’s inputs in a batch; right here, we set this dimension to 32.

- We then create an empty checklist of all_outputs to retailer the outputs that the mannequin will generate

- We begin iterating by the sentence variable with the step dimension set to the batch dimension we’ve got outlined.

- So, in every iteration, we tokenize the enter sentences in batches.

- Then, we transfer these tokenized sentences to the gadget on which we run the mannequin, both a GPU or CPU.

- Lastly, we carry out the mannequin inference by passing the inputs to the mannequin and appending the output logits to the all_outputs checklist. We then concatenate all these outputs to type a last output tensor.

Working this code will retailer the mannequin ends in a variable. We are going to add these predictions to the take a look at DataFrame in a brand new column. We take the argmax of every output; this provides us the label that has the very best likelihood for every output within the final_outputs checklist. Now, we have to consider the output generated by the LLM, which we will do by the code beneath:

from sklearn.metrics import accuracy_score, confusion_matrix

from sklearn.metrics import balanced_accuracy_score, classification_report

def get_metrics_result(test_df):

y_test = test_df.label

y_pred = test_df.predictions

print("Classification Report:")

print(classification_report(y_test, y_pred))

print("Balanced Accuracy Rating:", balanced_accuracy_score(y_test, y_pred))

print("Accuracy Rating:", accuracy_score(y_test, y_pred))

get_metrics_result(test_df)

Code Clarification

- We begin by importing the accuracy_score, balanced_accuracy_score, and classification_report from the sklearn library.

- Then we outline a operate referred to as get_metrics_result(), which takes in a dataframe and outputs the outcomes.

- On this operate, we first begin by storing the predictions and precise labels in a variable.

- Then, we give these precise and predicted values to the classification report, accuracy_score, and balance_accuracy_score, and print them.

- The balance_accuracy_score is beneficial when coping with imbalanced knowledge.

Working that has produced the next outcomes. We are able to see that we get an accuracy of 0.23, which may be very low. The mannequin’s precision, recall, and f1-score are very low, too; they don’t even attain a share above 50%. Testing them after coaching the mannequin will give us an understanding of how nicely it’s educated.

Earlier than we begin coaching, we have to preprocess the info earlier than sending it to the mannequin. For this, we work with the next code:

def data_preprocesing(row):

return tokenizer(row['text'], truncation=True, max_length=512)

tokenized_data = dataset.map(data_preprocesing, batched=True,

remove_columns=['text'])

tokenized_data.set_format("torch")Code Clarification

- We outline a operate referred to as data_preprocessing(), which expects a row of knowledge

- Inside it, we move the textual content content material of that row to the tokenizer with truncation set to true and max size set to 512 tokens

- Then we create the tokenized_data, by mapping this operate to the dataset that we’ve got created and eradicating the textual content column after mapping as a result of we solely want the tokens which the mannequin expects

- Lastly, we convert these tokenized knowledge to torch format

Now every Dataset within the datasetdict comprises three options/columns, i.e. labels, input_ids, and attention_masks. The input_ids and attention_masks are produced for every textual content with the above preprocessing operate. We are going to want an information collator for batch processing of knowledge whereas coaching. For this, we work with the next code:

from transformers import DataCollatorWithPadding

collate_fn = DataCollatorWithPadding(tokenizer=tokenizer)- Right here, we import the DataCollatorWithPadding class from the transformers library

- Then, we instantiate this class by giving it the tokenizer

- This collate_fn will pad the batch of inputs to a size equal to the utmost enter size in that batch

It will be sure that all of the inputs within the batch have the identical size, which will probably be required for quicker coaching. So, we uniformly pad the inputs to the longest sequence size utilizing a particular token just like the pad token, thus permitting simultaneous batch processing.

Additionally learn: Easy methods to Run Llama 3 Domestically?

Finetune Llama 3: Mannequin Coaching and Publish-Coaching Analysis

Earlier than we begin coaching, we’d like an error metric to guage it. The default error metric for the Giant Language Mannequin is the damaging log-likelihood loss. However right here, as a result of we’re modifying the LLM to make it a sequence classification software, we have to redefine the error metric that we have to take a look at the mannequin whereas coaching:

def compute_metrics(evaluations):

predictions, labels = evaluations

predictions = np.argmax(predictions, axis=1)

return {'balanced_accuracy' : balanced_accuracy_score(predictions, labels),

'accuracy':accuracy_score(predictions,labels)}- Right here, we outline a operate compute_metrics, which takes in a tuple containing the predictions and labels

- Then, from the given predictions, we extract the indices of those which have the very best likelihood by the np.argmax() operate

- Lastly, we return a dictionary containing the balanced accuracy and the unique accuracy scores

As a result of we’re going with a customized metric, we even outline a customized coach for coaching our LLM, which is required as a result of we’re working with class weights right here. For this, the code will probably be

class CustomTrainer(Coach):

def __init__(self, *args, class_weights=None, **kwargs):

tremendous().__init__(*args, **kwargs)

if class_weights will not be None:

self.class_weights = torch.tensor(class_weights,

dtype=torch.float32).to(self.args.gadget)

else:

self.class_weights = None

def compute_loss(self, mannequin, inputs, return_outputs=False):

labels = inputs.pop("labels").lengthy()

outputs = mannequin(**inputs)

logits = outputs.get('logits')

if self.class_weights will not be None:

loss = F.cross_entropy(logits, labels, weight=self.class_weights)

else:

loss = F.cross_entropy(logits, labels)

return (loss, outputs) if return_outputs else loss

Code Clarification

- Right here, we outline a customized coach class that inherits from the Coach class from HuggingFace

- This class implements an init operate, the place we outline that if class_weights are offered, then assign the class_weights as one of many occasion variables, else assign it to None

- Earlier than assigning, we convert the class_weights to torch.tensor and alter the dtype to float32

- Then we outline the compute_loss operate of the Coach class, which is required for it to carry out backpropagation

- On this, first, we extract the labels from the inputs and convert them to knowledge kind lengthy

- Then we give the inputs to the mannequin, which takes in these inputs and generates the chances for the courses; we take the logits from these outputs

- Then we name the cross entropy loss as a result of we’re coping with a number of labels classification and provides it the mannequin output logits and labels. And if class weights are current, we even present these to the mannequin

- Lastly, we return these losses and outputs as required

Coaching Arguments

Now, we are going to outline our Coaching Arguments. The code for this will probably be beneath

training_args = TrainiAgrumentsngArguments(

output_dir="sentiment_classification",

learning_rate = 1e-4,

per_device_train_batch_size = 8,

per_device_eval_batch_size = 8,

num_train_epochs = 1,

logging_steps=1,

weight_decay = 0.01,

evaluation_strategy = 'epoch',

save_strategy = 'epoch',

load_best_model_at_end = True,

report_to="none"

)Code Clarification

- We instantiate an object of the TrainingArguments class. To this, we move parameters like

- output_dir: That is the place we want to save the mannequin. We are able to present the trail right here

- learning_rate: Right here, we give the training charge to be utilized whereas coaching

- per_device_train_batch_size: Right here, and we set the batch dimension for the coaching knowledge whereas coaching

- per_device_eval_batch_size; Right here, we set the analysis batch dimension for the take a look at/analysis knowledge

- num_train_epochs: Right here, we give the variety of coaching epochs we wish in coaching the LLM

- logging_steps: Right here, we inform how typically to log the outcomes

- weight_decay: By how a lot ought to the weights decay

- save_strategy: That is set to epoch, which tells that for each epoch, the mannequin weights are saved

- evaluation_strategy: That is set to epoch, which tells that for each epoch, analysis of the mannequin is carried out

- load_best_model_at_end: Giving it a worth of True will load the mannequin with one of the best parameters that present one of the best outcomes

Passing Object to Coach

It will create our TrainingArguments object. Now, we’re able to move it to the Coach we created. The code for this will probably be beneath

coach = CustomTrainer(

mannequin = mannequin,

args = training_args,

train_dataset = tokenized_datasets['train'],

eval_dataset = tokenized_datasets['val'],

tokenizer = tokenizer,

data_collator = collate_fn,

compute_metrics = compute_metrics,

class_weights=class_weights,

)

train_result = coach.prepare()Code Clarification

- We begin by creating an object of the CustomTrainer() class that we’ve got created earlier

- To this, we give the mannequin that’s the Llama 3 with customized Sequential Head, the coaching arguments

- We even move within the tokenized coaching knowledge and the analysis knowledge together with the tokenizer

- We even give the collator operate in order that batch processing takes place whereas the LLM is being educated

- Lastly, we give the metric operate that we’ve got created and the category weights, which can assist if we’ve got knowledge with imbalanced labels

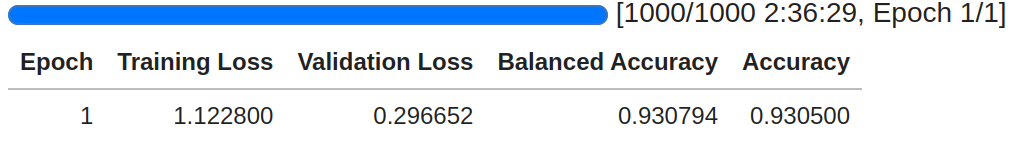

We’ve now created the coach object. We now name the .prepare() operate of the coach object to start out the coaching course of and retailer the ends in the train_result

Code Clarification

- Above, we test that the coaching has taken place for 1000 steps. It’s because we’ve got 8000 coaching knowledge, and every time, in every step, we ship 8 samples; therefore we’ve got a complete of 1000 steps

- The coaching happened for two hours and 36 minutes for the mannequin to iterate over your complete coaching knowledge for one epoch

- We are able to see the coaching lack of the mannequin is 1.12, and the validation loss is 0.29

- The mannequin is exhibiting a powerful consequence with the validation knowledge, with an accuracy of 93%

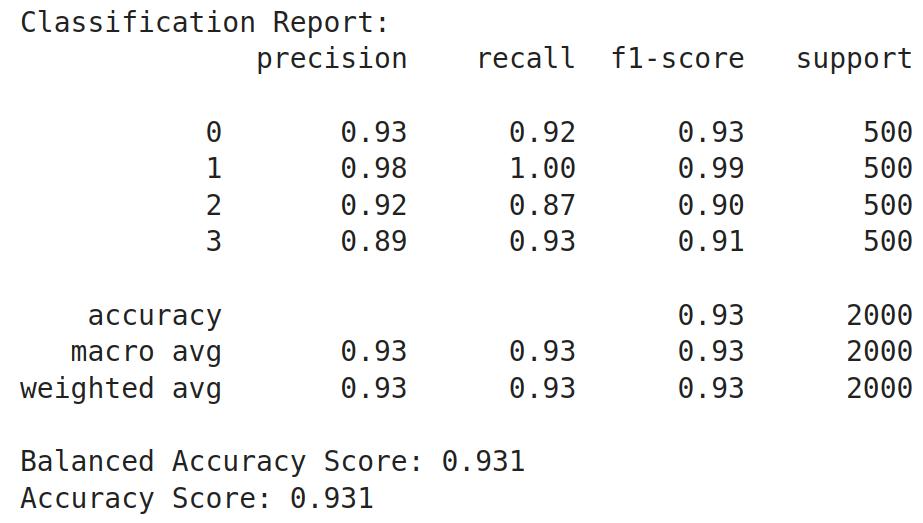

Now, allow us to attempt to carry out evaluations to check the newly educated mannequin on the take a look at knowledge:

def generate_predictions(mannequin,df_test):

sentences = df_test.textual content.tolist()

batch_size = 32

all_outputs = []

for i in vary(0, len(sentences), batch_size):

batch_sentences = sentences[i:i + batch_size]

inputs = tokenizer(batch_sentences, return_tensors="pt",

padding=True, truncation=True, max_length=512)

inputs = {ok: v.to('cuda' if torch.cuda.is_available() else 'cpu')

for ok, v in inputs.objects()}

with torch.no_grad():

outputs = mannequin(**inputs)

all_outputs.append(outputs['logits'])

final_outputs = torch.cat(all_outputs, dim=0)

df_test['predictions']=final_outputs.argmax(axis=1).cpu().numpy()

generate_predictions(mannequin,test_df)

get_performance_metrics(test_df)

Code Clarification

- Right here, we create a operate similar to the one which we’ve got created

- On this operate, we convert the textual content column from the take a look at dataframe into a listing of sentences after which run a for loop with a step dimension equal to the batch dimension

- On this for loop, we take the batch variety of sentences, ship them to the tokenizer to tokenize them, and push them to the GPU

- Then, we insert this checklist of tokens into the mannequin and get the checklist of possibilities from it

- Lastly, we get the index of the very best likelihood in every output and save the index again within the take a look at dataframe

- This test_df is given again to the get_performance_metric operate which takes on this dataframe and outputs the outcomes

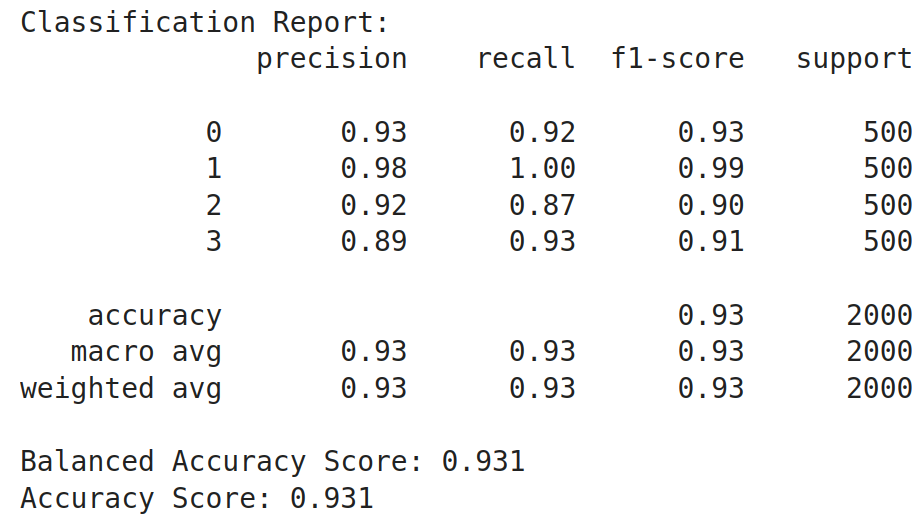

Working this code has generated the next outcomes. We see that there’s a nice enhance within the accuracy of the mannequin. Different metrics like precision, recall, and f1-score have elevated too from their preliminary values. The general accuracy has elevated from 0.23 earlier than coaching to 0.93 after coaching, which is a 0.7 i.e. 70% enchancment within the mannequin after coaching it. From this, we will get an perception that Giant Language Fashions are very a lot able to being employed as sequence classifiers

Conclusion

In conclusion, fine-tuning giant language fashions (LLMs) (finetune Llama 3) for sequence classification includes a number of detailed steps, from making ready the dataset to quantizing the mannequin for environment friendly coaching on restricted {hardware}. By using varied libraries from HuggingFace and implementing strategies reminiscent of Immediate Engineering and LoRA configurations, it’s doable to successfully prepare these fashions for particular duties reminiscent of information classification. This information has demonstrated your complete course of, from preliminary setup and knowledge preprocessing to mannequin coaching and analysis, highlighting the flexibility and energy of LLMs in pure language processing duties.

Key Takeaways

- Giant Language Fashions (finetune Llama 3) are pre-trained on in depth datasets and are able to producing and understanding advanced textual content

- Whereas helpful, immediate engineering alone could not suffice for all classification duties; mannequin fine-tuning can present higher efficiency

- Correctly loading, splitting, and balancing datasets are basic steps earlier than mannequin coaching to make sure correct and truthful mannequin efficiency

- Defining customized error metrics and coaching loops is important for dealing with particular necessities like class weights in sequence classification duties

- Pre-training and post-training evaluations utilizing metrics reminiscent of accuracy and balanced accuracy present insights into mannequin efficiency and the effectiveness of the coaching course of

The media proven on this article are usually not owned by Analytics Vidhya and is used on the Creator’s discretion.

Continuously Requested Questions

A. Giant Language Fashions are AI programs educated on huge quantities of textual content knowledge to know and generate human-like language.

A. Sequence Classification is a job the place a sequence of textual content is categorized into predefined courses or labels.

A. Immediate Engineering may not be dependable as a result of it relies upon closely on the immediate’s construction and might lack consistency.

A. Quantization reduces fashions’ reminiscence footprint, making it possible to run them on {hardware} with restricted assets, like low-RAM GPUs.

A. Class imbalance might be addressed by calculating and making use of class weights, giving extra significance to much less frequent courses throughout coaching.