What’s load testing and why does it matter?

Load testing is a critical process for any database or data service, including Rockset. With load testing, we aim to assess the system’s behavior under both normal and peak conditions. This process helps evaluate important metrics like Queries Per Second (QPS), concurrency, and query latency. Understanding these metrics is essential for sizing your compute resources correctly and ensuring that they can handle the expected load. This, in turn, helps you meet Service Level Agreements (SLAs) and ensures a smooth, uninterrupted user experience. This is especially important for customer-facing use cases, where end users expect a snappy user experience. Load testing is sometimes also called performance or stress testing.
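These three metrics are linked. A common rule of thumb is Little’s Law: the average number of requests in flight (concurrency) equals throughput multiplied by the average time each request spends in the system. The sketch below applies it; the function names and example numbers are hypothetical and are not Rockset measurements.

```python
def expected_concurrency(qps: float, avg_latency_s: float) -> float:
    """Little's Law: average in-flight requests = arrival rate x average latency."""
    if qps < 0 or avg_latency_s < 0:
        raise ValueError("qps and latency must be non-negative")
    return qps * avg_latency_s


def max_qps(concurrency: float, avg_latency_s: float) -> float:
    """Rearranged: throughput that a fixed number of in-flight requests can sustain."""
    if avg_latency_s <= 0:
        raise ValueError("latency must be positive")
    return concurrency / avg_latency_s


# Hypothetical target: 50 QPS at an average latency of 200 ms
print(expected_concurrency(50, 0.2))  # roughly 10 queries in flight at any moment
print(max_qps(10, 0.2))               # roughly 50 QPS from 10 concurrent queries
```

This also explains why, in a closed-loop test with no wait time between requests, RPS is roughly the number of simulated users divided by the average response time.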

“53% of visits are likely to be abandoned if pages take longer than 3 seconds to load” – Google

Rockset compute resources (called virtual instances or VIs) come in different sizes, ranging from Small to 16XL, and each size has a predefined number of vCPUs and amount of memory available. Choosing an appropriate size depends on your query complexity, your dataset size and the selectivity of your queries, the number of queries expected to run concurrently, and your target query latency. Additionally, if your VI will also be used for ingestion, you should factor in the resources needed to handle ingestion and indexing in parallel with query execution. Luckily, we offer two features that can help with this:

- Auto-scaling – with this feature, Rockset will automatically scale the VI up and down depending on the current load. This is important if you have some variability in your load and/or use your VI for both ingestion and querying.

- Compute-compute separation – this is useful because you can create VIs that are dedicated solely to running queries, which ensures that all of the available resources are geared towards executing those queries efficiently. This means you can isolate queries from ingestion, or isolate different apps on different VIs, to ensure scalability and performance.

We recommend load testing on at least two virtual instances: on the main VI, where ingestion is running, and on a separate query VI. This helps you decide between a single-VI and a multi-VI architecture.

Load testing helps us identify the limits of the chosen VI for our particular use case and determine an appropriate VI size to handle our desired load.

Tools for load testing

When it comes to load testing tools, a few popular options are JMeter, k6, Gatling and Locust. Each of these tools has its strengths and weaknesses:

- JMeter: A versatile and user-friendly tool with a GUI, ideal for various types of load testing, but it can be resource-intensive.

- k6: Optimized for high performance and cloud environments, using JavaScript for scripting; suitable for developers and CI/CD workflows.

- Gatling: A high-performance tool using Scala, best for complex, advanced scripting scenarios.

- Locust: Python-based, offering simplicity and rapid script development; great for straightforward testing needs.

Each tool offers a unique set of features, and the choice depends on the specific requirements of the load test being conducted. Whichever tool you use, be sure to read through its documentation and understand how it works and how it measures latencies/response times. Another good tip is not to mix and match tools in your testing – if you are load testing a use case with JMeter, stick with it to get reproducible and trustworthy results that you can share with your team or stakeholders.

Rockset has a REST API that can be used to execute queries, and all of the tools listed above can be used to load test REST API endpoints. For this blog, I’ll focus on load testing Rockset with Locust, but I’ll provide some useful resources for JMeter, k6 and Gatling as well.

Setting up Rockset and Locust for load testing

Let’s say we have a sample SQL query that we want to test, and our data is already ingested into Rockset. The first thing we usually do is convert that query into a Query Lambda – this makes it very easy to test that SQL query as a REST endpoint. It can be parametrized, and the SQL can be versioned and kept in one place, instead of going back and forth and changing your load testing scripts every time you need to change something in the query.

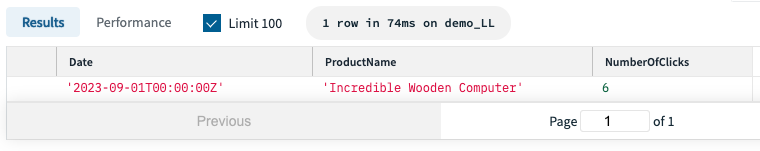

Step 1 – Identify the query you want to load test

In our scenario, we want to find the most popular product on our webshop for a particular day. This is what our SQL query looks like (note that :date is a parameter that we can supply when executing the query):

```sql
-- top product for a particular day
SELECT
    s.Date,
    MAX_BY(p.ProductName, s.Count) AS ProductName,
    MAX(s.Count) AS NumberOfClicks
FROM
    "Demo-Ecommerce".ProductStatsAlias s
    INNER JOIN "Demo-Ecommerce".ProductsAlias p ON s.ProductID = CAST(p._id AS INT)
WHERE
    s.Date = :date
GROUP BY
    1
ORDER BY
    1 DESC;
```

Step 2 – Save your query as a Query Lambda

We’ll save this query as a Query Lambda called LoadTestQueryLambda, which will then be available as a REST endpoint:

https://api.usw2a1.rockset.com/v1/orgs/self/ws/sandbox/lambdas/LoadTestQueryLambda/tags/latest

```shell
curl --request POST \
  --url https://api.usw2a1.rockset.com/v1/orgs/self/ws/sandbox/lambdas/LoadTestQueryLambda/tags/latest \
  -H "Authorization: ApiKey $ROCKSET_APIKEY" \
  -H 'Content-Type: application/json' \
  -d '{
    "parameters": [
      {
        "name": "days",
        "type": "int",
        "value": "1"
      }
    ],
    "virtual_instance_id": "<your virtual instance ID>"
  }' \
  | python -m json.tool
```
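If you prefer to script this call in Python, the same request can be built with the standard library. This is a minimal sketch that only constructs the request (sending it needs a valid API key and network access); the function name and its arguments are illustrative, and the region, workspace, Query Lambda name and tag defaults simply mirror the example URL above. Any `parameters` you pass must match the names and types defined in your Query Lambda.

```python
import json
import urllib.request


def build_lambda_request(api_key: str, vi_id: str, parameters=None,
                         region_host: str = "https://api.usw2a1.rockset.com",
                         workspace: str = "sandbox",
                         lambda_name: str = "LoadTestQueryLambda",
                         tag: str = "latest") -> urllib.request.Request:
    """Build (but do not send) a POST request that executes a tagged Query Lambda."""
    url = (f"{region_host}/v1/orgs/self/ws/{workspace}"
           f"/lambdas/{lambda_name}/tags/{tag}")
    body = {"virtual_instance_id": vi_id}
    if parameters:
        # Same shape as the curl example: a list of {"name", "type", "value"} objects
        body["parameters"] = parameters
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": "ApiKey " + api_key,
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_lambda_request("dummy-key", "<your virtual instance ID>")
# To actually execute it (requires a real API key and VI ID):
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp))
```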

Step 3 – Generate your API key

Now we need to generate an API key, which our Locust script will use to authenticate itself to Rockset and run the test. You can create an API key easily through our console or through the API.

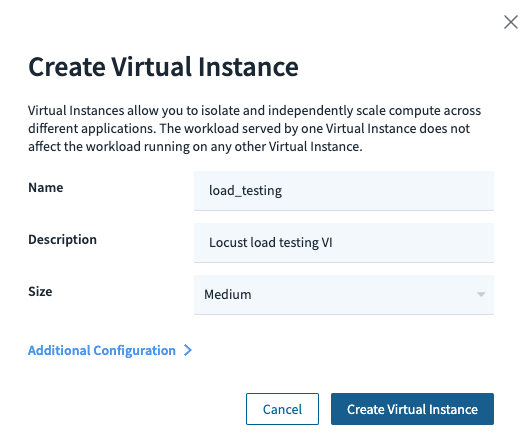

Step 4 – Create a virtual instance for load testing

Next, we need the ID of the virtual instance we want to load test. In our scenario, we want to run a load test against a Rockset virtual instance that is dedicated solely to querying. We spin up an additional Medium virtual instance for this:

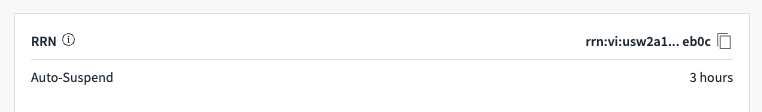

Once the VI is created, we can get its ID from the console.

Step 5 – Install Locust

Next, we’ll install and set up Locust. You can do this on your local machine or on a dedicated instance (think EC2 in AWS).

```shell
pip install locust
```

Step 6 – Create your Locust test script

Once that’s done, we’ll create a Python script for the Locust load test (note that it expects a ROCKSET_APIKEY environment variable to be set, containing our API key from Step 3).

We can use the script below as a template:

```python
import os

from locust import HttpUser, task, tag


class query_runner(HttpUser):
    # Base URL for all requests: replace this with your region's URI
    # (the --host command-line option overrides it)
    host = "https://api.usw2a1.rockset.com/v1/orgs/self"

    # API key is read from an environment variable
    ROCKSET_APIKEY = os.getenv("ROCKSET_APIKEY")

    def on_start(self):
        if not self.ROCKSET_APIKEY:
            raise RuntimeError("ROCKSET_APIKEY environment variable is not set")
        # Headers sent with every request made by this simulated user
        self.client.headers.update({
            "Authorization": "ApiKey " + self.ROCKSET_APIKEY,
            "Content-Type": "application/json",
        })
        self.vi_id = "<your virtual instance ID>"  # replace this with your VI ID

    @tag("LoadTestQueryLambda")
    @task(1)
    def LoadTestQueryLambda(self):
        # using the Query Lambda's default parameters for now
        data = {
            "virtual_instance_id": self.vi_id
        }
        target_service = "/ws/sandbox/lambdas/LoadTestQueryLambda/tags/latest"  # replace this with your Query Lambda
        result = self.client.post(
            target_service,
            json=data
        )
```
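The task above sends the Query Lambda’s default parameters, so every simulated user asks for the same day. To spread the load across different inputs, you could vary :date per request. A minimal sketch follows; it assumes the parameter is named `date` with type `date` (match these to your Query Lambda), and the helper name and date window are hypothetical.

```python
from datetime import date, timedelta
from random import randrange


def random_date_param(start: date, num_days: int) -> dict:
    """Pick a random day in [start, start + num_days) as a Query Lambda parameter.

    The parameter name "date" and type "date" are assumptions.
    """
    day = start + timedelta(days=randrange(num_days))
    return {"name": "date", "type": "date", "value": day.isoformat()}


# Inside the Locust task, the request body could then become:
# data = {
#     "virtual_instance_id": self.vi_id,
#     "parameters": [random_date_param(date(2023, 11, 1), 30)],
# }
```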

Step 7 – Run the load test

Once we set the API key environment variable, we can start the Locust environment:

```shell
export ROCKSET_APIKEY=<your api key>
locust -f my_locust_load_test.py --host https://api.usw2a1.rockset.com/v1/orgs/self
```

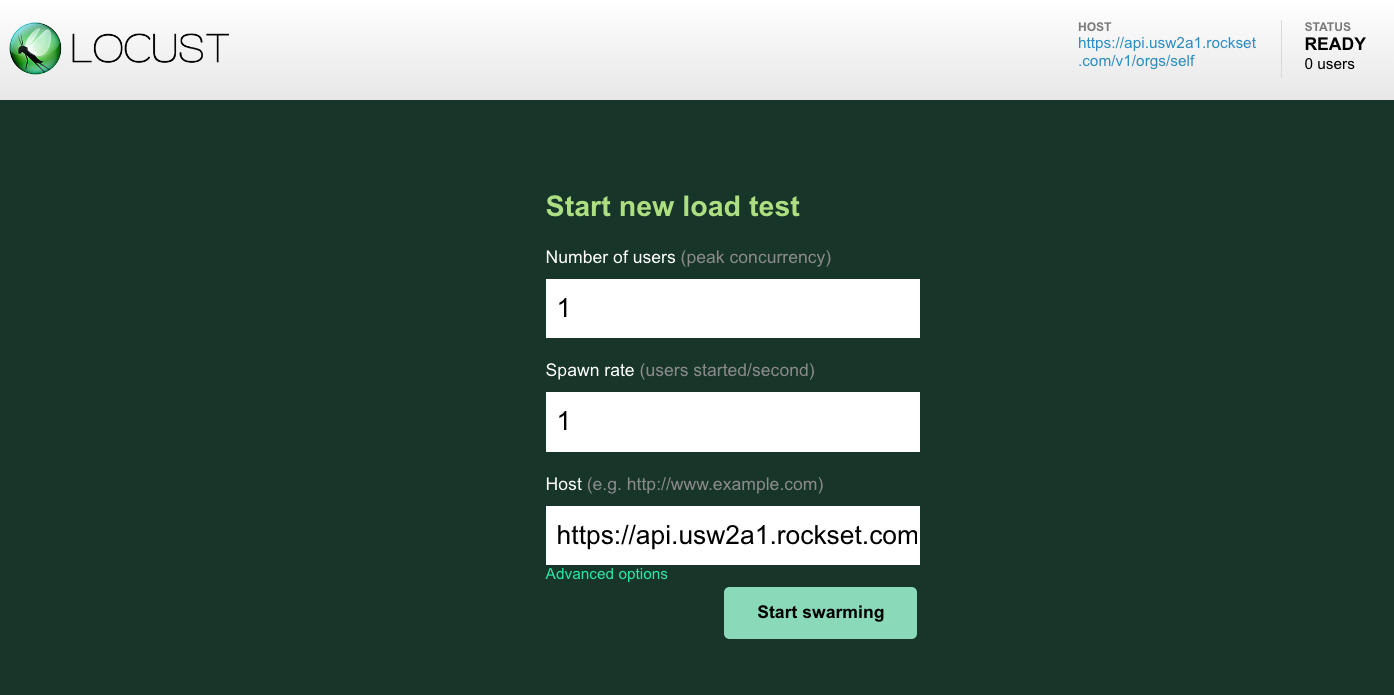

Then navigate to http://localhost:8089, where we can start our Locust load test.

Let’s explore what happens once we hit the Start swarming button:

- Initialization of simulated users: Locust starts creating virtual users (up to the number you specified) at the rate you defined (the spawn rate). These users are instances of the user class defined in your Locust script. In our case, we start with a single user, then manually increase it to 5 and 10 users, and then go back down to 5 and 1.

- Task execution: Each virtual user starts executing the tasks defined in the script. In Locust, tasks are typically HTTP requests, but they can be any Python code. Tasks are picked randomly or based on the weights assigned to them (if any). We have just one query that we’re executing (our LoadTestQueryLambda).

- Performance metrics collection: As the virtual users perform tasks, Locust collects and calculates performance metrics. These metrics include the number of requests made, the number of requests per second, response times, and the number of failures.

- Real-time statistics update: The Locust web interface updates in real time, displaying these statistics. This includes the number of users currently swarming, the request rate, the failure rate, and response times.

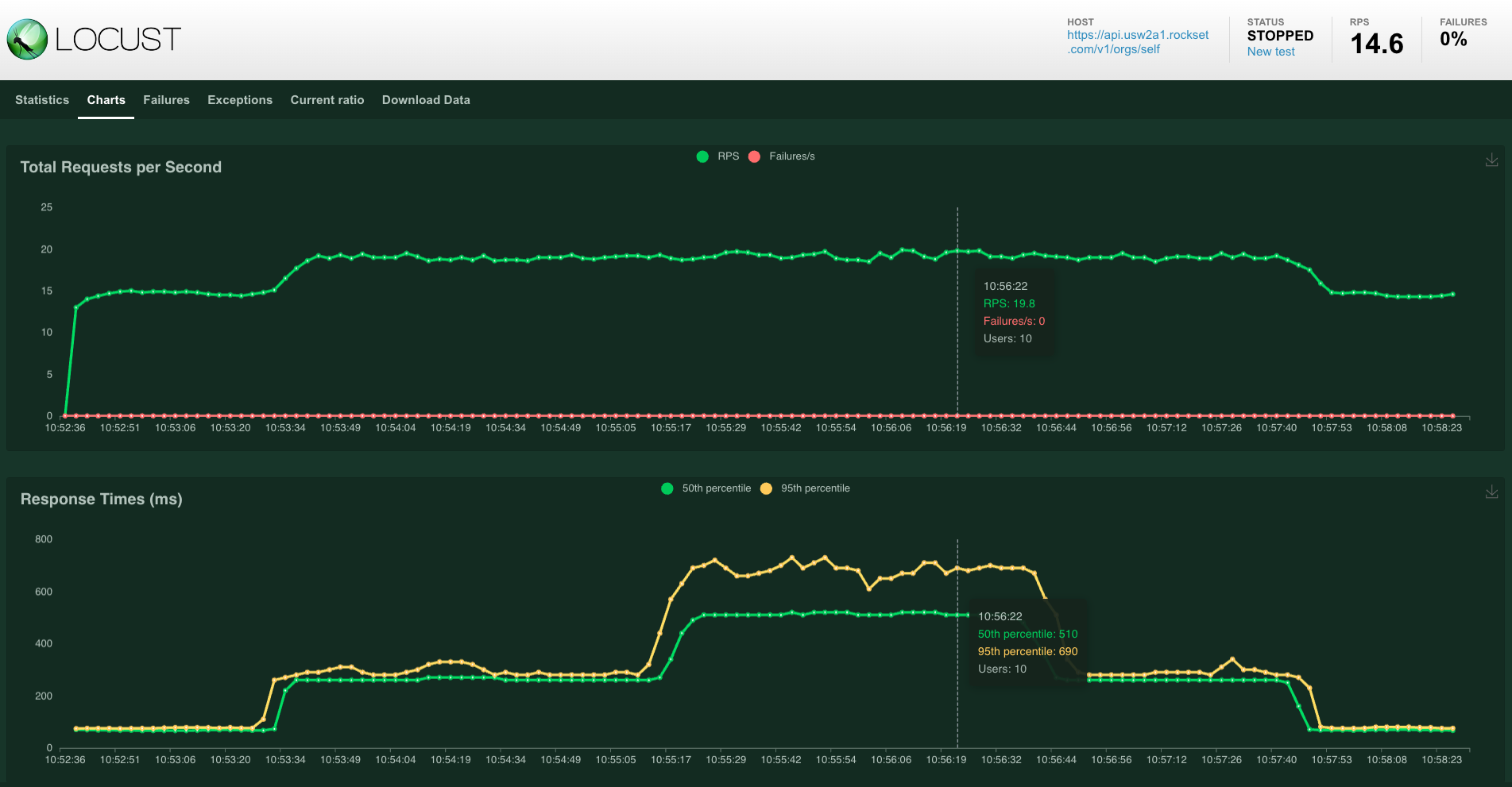

- Test scalability: Locust will continue to spawn users until it reaches the total number specified. It increases the load gradually according to the specified spawn rate, allowing you to observe how system performance changes as the load increases. You can see this in the graph, where the number of users grows to 5 and 10 and then goes down again.

- User behavior simulation: Virtual users can wait between tasks (for example, a random amount of time), as defined by the wait_time attribute in the script. This simulates more realistic user behavior. We didn’t do this in our case, but you can do this and more advanced things in Locust, like custom load shapes.

- Continuous test execution: The test will keep running until you decide to stop it, or until it reaches a predefined duration if you’ve set one.

- Resource utilization: During this process, Locust uses your machine’s resources to simulate users and make requests. It’s important to note that the performance of the Locust test itself can depend on the resources of the machine it’s running on.
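The 1, 5, 10, 5, 1 user ramp we performed by hand can also be scripted as a custom load shape. In Locust, a LoadTestShape subclass implements tick(), which returns a (user_count, spawn_rate) tuple, or None to stop the test. The schedule logic below is plain Python so it can be checked on its own; the stage durations and spawn rate are hypothetical.

```python
# Hypothetical stages: (end time in seconds, target number of users)
STAGES = [(120, 1), (240, 5), (360, 10), (480, 5), (600, 1)]
SPAWN_RATE = 1  # users started (or stopped) per second


def users_at(run_time: float, stages=STAGES, spawn_rate=SPAWN_RATE):
    """Return (user_count, spawn_rate) for the current stage, or None when finished."""
    for end_time, users in stages:
        if run_time < end_time:
            return users, spawn_rate
    return None  # returning None from tick() tells Locust to stop the test


# In a locustfile, the schedule plugs into Locust's LoadTestShape:
#
# from locust import LoadTestShape
#
# class StepShape(LoadTestShape):
#     def tick(self):
#         return users_at(self.get_run_time())
```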

Let’s now interpret the results we’re seeing.

Interpreting and validating load testing results

Interpreting the results of a Locust run involves understanding key metrics and what they indicate about the performance of the system under test. Here are some of the main metrics provided by Locust and how to interpret them:

- Number of users: The total number of simulated users at any given point in the test. This helps you understand the load level on your system. You can correlate system performance with the number of users to determine at what point performance degrades.

- Requests per second (RPS): The number of requests (queries) made to your system per second. A higher RPS indicates a higher load. Compare this with response times and error rates to assess whether the system can handle concurrency and high traffic smoothly.

- Response time: Usually displayed as average, median, and percentile (e.g., 90th and 99th percentile) response times. You’ll likely look at the median and the 90th/99th percentiles, as these describe the experience of “most” users – only 10 or 1 percent of requests will have a worse experience.

- Failure rate: The percentage or number of requests that resulted in an error. A high failure rate indicates problems with the system under test. It’s important to analyze the nature of these errors.
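To make the percentile figures concrete: P90 is the response time that 90% of requests stay at or below. The sketch below computes the median, P90 and P99 from raw response times using the nearest-rank method; Locust’s own reporting may round or bucket values differently, so treat this as an illustration rather than a reimplementation of Locust.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


response_times_ms = list(range(1, 101))  # hypothetical: 1 ms .. 100 ms
print({p: percentile(response_times_ms, p) for p in (50, 90, 99)})
# -> {50: 50, 90: 90, 99: 99}
```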

Below you can see the total RPS and response times we achieved under different loads for our load test, going from a single user to 10 users and then back down.

Our RPS went up to about 20 while maintaining a median query latency below 300 milliseconds and a P99 of 700 milliseconds.
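Locust can also write these statistics to CSV files when started with the --csv option, which makes it possible to check a run against an SLA automatically. The sketch below reads the aggregated row of the stats file and compares it with SLA targets. The column names (Name, Request Count, Failure Count, 50%, 99%) are assumptions based on recent Locust versions, so check them against your own file’s header; the sample data and thresholds are hypothetical.

```python
import csv
import io


def check_sla(stats_csv_text, p50_ms, p99_ms, max_failure_ratio):
    """Compare the 'Aggregated' row of a Locust stats CSV with SLA targets.

    Column names are assumed; verify them against your Locust version.
    Returns a dict mapping each check to True (met) or False (missed).
    """
    for row in csv.DictReader(io.StringIO(stats_csv_text)):
        if row["Name"] == "Aggregated":
            requests = int(row["Request Count"])
            failures = int(row["Failure Count"])
            return {
                "p50": float(row["50%"]) <= p50_ms,
                "p99": float(row["99%"]) <= p99_ms,
                "failures": requests > 0 and failures / requests <= max_failure_ratio,
            }
    raise ValueError("no 'Aggregated' row found")


# Hypothetical, trimmed CSV (real files contain more columns)
sample = """Type,Name,Request Count,Failure Count,50%,99%
POST,/ws/sandbox/lambdas/LoadTestQueryLambda/tags/latest,1000,3,250,650
,Aggregated,1000,3,250,650
"""
print(check_sla(sample, p50_ms=300, p99_ms=700, max_failure_ratio=0.01))
# -> {'p50': True, 'p99': True, 'failures': True}
```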

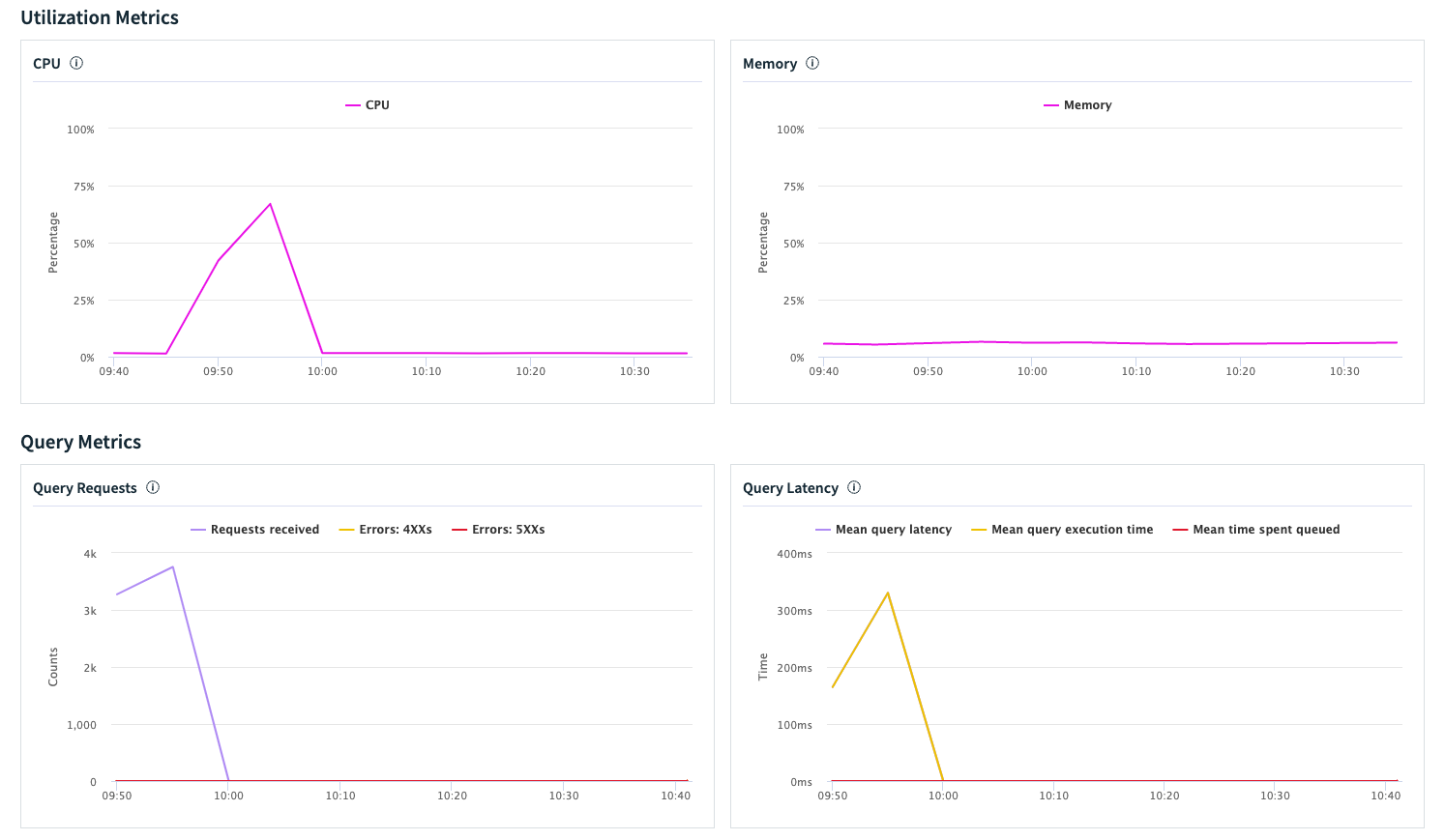

We can now correlate these data points with the available virtual instance metrics in Rockset. Below, you can see how the virtual instance handles the load in terms of CPU, memory and query latency. There’s a correlation between the number of users in Locust and the peaks we see on the VI utilization graphs. You can also see query latency starting to rise, and concurrency and queries per second going up. CPU stays below 75% at peak and memory usage looks stable. We also don’t see any significant queueing happening in Rockset.

Apart from viewing these metrics in the Rockset console or through our metrics endpoint, you can also interpret and analyze the actual SQL queries that were running, their individual performance, queue time, and so on. To do this, we must first enable query logs, and then we can run queries like this one to figure out our median run and queue times:

```sql
SELECT
    query_sql,
    COUNT(*) AS count,
    ARRAY_SORT(ARRAY_AGG(runtime_ms))[(COUNT(*) + 1) / 2] AS median_runtime,
    ARRAY_SORT(ARRAY_AGG(queued_time_ms))[(COUNT(*) + 1) / 2] AS median_queue_time
FROM
    commons."QueryLogs"
WHERE
    vi_id = '<your virtual instance ID>'
    AND _event_time > TIMESTAMP '2023-11-24 09:40:00'
GROUP BY
    query_sql
```
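The median expression in this query sorts each group’s values and picks the element at position (COUNT(*) + 1) / 2 of the 1-indexed array. The Python mirror below shows what that selects, assuming the division truncates to an integer as the expression relies on: for an odd count it is the true median, and for an even count it is the lower of the two middle values rather than their average.

```python
def positional_median(values):
    """Mirror of ARRAY_SORT(ARRAY_AGG(x))[(COUNT(*) + 1) / 2] with 1-based indexing.

    Assumes integer (truncating) division in the SQL expression.
    """
    if not values:
        raise ValueError("no values")
    ordered = sorted(values)
    position = (len(ordered) + 1) // 2  # 1-based position in the sorted array
    return ordered[position - 1]


print(positional_median([120, 80, 200]))       # odd count -> 120
print(positional_median([120, 80, 200, 400]))  # even count: lower middle value -> 120
```

With hundreds of queries per group the difference from a true median is usually small, but it is worth keeping in mind when comparing against Locust’s own median.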

We can repeat this load test on the main VI as well, to see how the system handles ingestion and queries under load. The process would be the same; we would just use a different VI identifier in our Locust script in Step 6.
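To switch between the query VI and the main VI without editing the script, the VI ID can come from an environment variable, just like the API key. A minimal sketch; the variable name ROCKSET_VI_ID and the helper name are hypothetical. In the Locust script’s on_start, you would set self.vi_id = load_vi_id() instead of the hard-coded string.

```python
import os


def load_vi_id(env_var: str = "ROCKSET_VI_ID") -> str:
    """Read the target virtual instance ID from an environment variable (hypothetical name)."""
    vi_id = os.environ.get(env_var, "").strip()
    if not vi_id:
        raise RuntimeError(f"{env_var} is not set; export the ID of the VI to test")
    return vi_id
```

Each run then differs only in its environment, for example `ROCKSET_VI_ID=<main VI ID> locust -f my_locust_load_test.py` versus the same command with the query VI’s ID.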

Conclusion

In summary, load testing is an important part of ensuring the reliability and performance of any database solution, including Rockset. By selecting the right load testing tool and setting up Rockset appropriately for load testing, you can gain valuable insights into how your system will perform under various conditions.

Locust is easy enough to get started with quickly, but because Rockset has REST API support for executing queries and Query Lambdas, it’s easy to hook up any load testing tool.

Remember, the goal of load testing is not just to identify the maximum load your system can handle, but also to understand how it behaves under different stress levels and to ensure that it meets the required performance standards.

Quick load testing tips before we end the blog:

- Always load test your system before going to production

- Use Query Lambdas in Rockset to easily parametrize, version-control and expose your queries as REST endpoints

- Use compute-compute separation to perform load testing on a virtual instance dedicated to queries, as well as on your main (ingestion) VI

- Enable query logs in Rockset to keep statistics of executed queries

- Analyze the results you’re getting and compare them against your SLAs – if you need better performance, there are several strategies for addressing this, and we’ll go through these in a future blog.

Have fun testing 💪

Useful resources

Here are some useful resources for JMeter, Gatling and k6. The process is very similar to what we’re doing with Locust: you need to have an API key and authenticate against Rockset, and then hit the Query Lambda REST endpoint for a particular virtual instance.